As users prompt a TTI, how does the part-of-speech (POS) structure of their prompts change over time?

We all adapt our communication to match the person we're talking to. But how does this change if our conversation partner is not a person, but a generative AI model– one that doesn't speak in words, but in images? This project aims to answer this question by analyzing user prompts fed into MidJourney AI over a prolonged time period.

To begin this project, I aimed to establish an understanding of my research topic by reading the pre-existing literature on the subject. After curating a decent bibliography, I summarized my findings in a literature review paper. My literature review unveiled a few findings:

I continued reviewing more papers in the field to broaden my understanding, with the goal of implementing them in my second version of the literature review. As I continued reviewing the available literature, I formed a hypothesis that would guide my future research:

The dataset I analyzed was a table of user prompts that the participants fed into MidJourney. These prompts were extracted from comments made in Discord, a popular messaging app which served as the platform for this study. (Note: this study was initially conducted during the COVID-19 pandemic, hence the usage of a virtual platform.)

The data was 'cleaned' by removing empty cells, unnecessary symbols in the prompts (such as @#$%^&*), and deleting cells containing gibberish, where the contents of the original message had been corrupted due to the extraction process. I simply cleaned these cells by using aggregate methods in Excel to systematically delete these errors as needed.

Then, I created a program in Python using Pandas, Numby, and MatLib to extract the parts-of-speech (POS) tags from the now-cleaned prompts dataset. The program would read the dataset row-by-row and tag the prompt with appropriate POS tags. The program would then count the amount of POS tags that appear in the prompt and "tally" it up, which would then be exported into a .csv file.

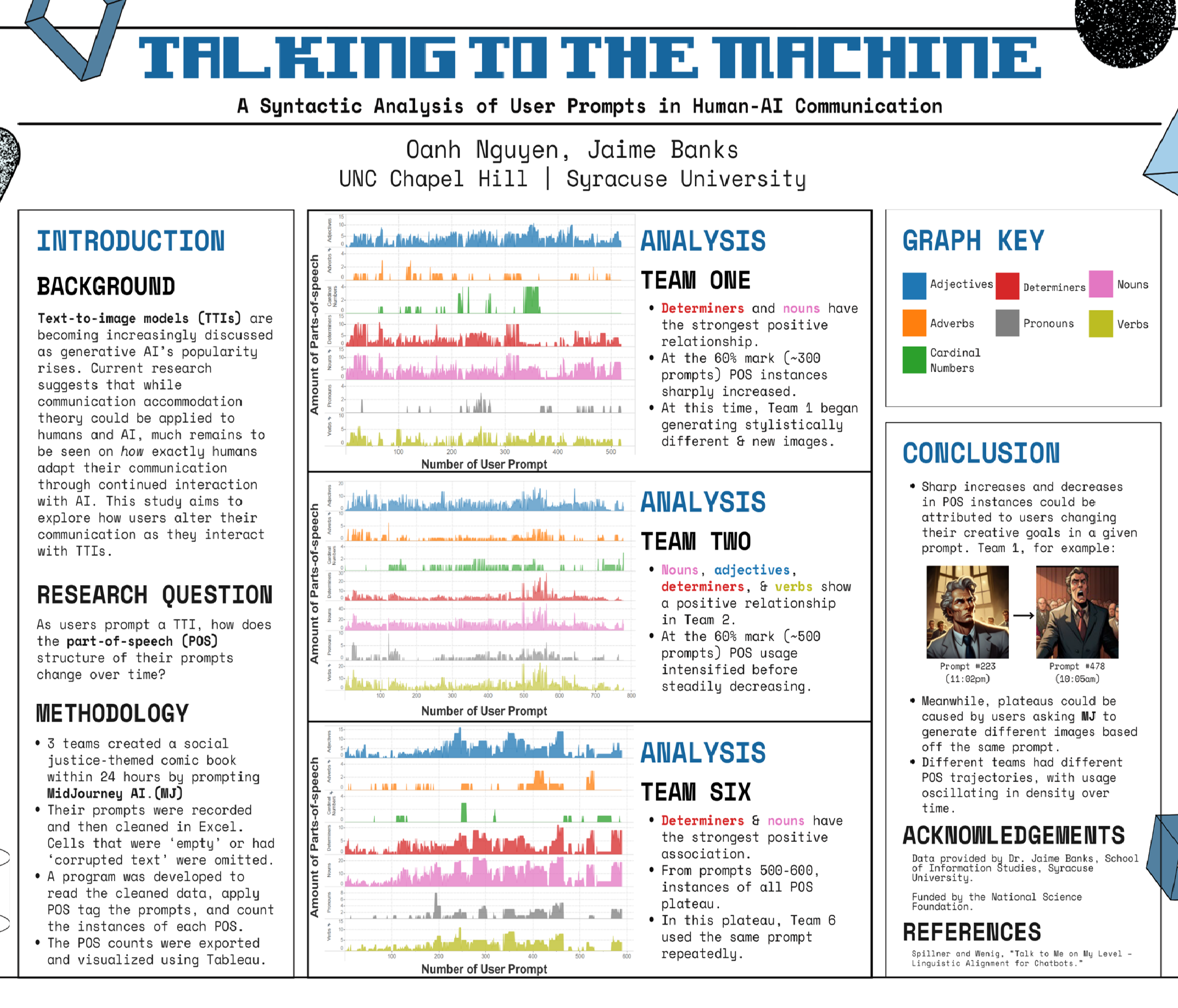

Finally, I visualized these changes with Tableau. I created tables displaying the changes in the amount of POS tags of user prompts over time for each of the 3 teams, which would later act as a foundation for my final analysis.

1# Reads "iDare01_Team1.csv", preprocesses punctuation, tokenizes words, uses POS tagging to assign

2# words as their grammatical function, implements a pandas dataframe, uses a function to define percent change

3# Created by Oanh Nguyen as part of the 2024 REU Program at Syracuse University.

4

5import nltk # imports nltk library

6import pandas as pd # imports pandas library as pd

7import matplotlib.pyplot as plt # importing matplotlib

8from nltk.tag import pos_tag # imports pos tag from nltk

9from nltk.tokenize import word_tokenize # imports word tokenizer from nltk

10from collections import Counter # imports counter function

11

12nltk.download('punkt') # downloads punkt package from ltk

13nltk.download('averaged_perceptron_tagger') # downloads perceptron tagger from nltk

14

15df = pd.read_csv("iDare_Team06.csv", usecols=['Content_Cleaned','Time','Date']) # reads csv file and uses content_cleaned, time, and date column

16

17df.drop([3,6,7], inplace = True) # drops user id, mentions, and link columns

18

19df['Content_Cleaned'] = df['Content_Cleaned'].astype(str) # converts content cleaned column to a string

20

21tok_and_tag = lambda x: pos_tag(word_tokenize(x)) # defines function that tokenizes comments and pos tags them

22

23df['Content_Cleaned'] = df['Content_Cleaned'].apply(str.lower) # makes content all lowercase

24df['tagged_sent'] = df['Content_Cleaned'].apply(tok_and_tag) # applies function tok_and_tag

25

26# print(df['tagged_sent'])

27

28df['pos_counts'] = df['tagged_sent'].apply(lambda x: Counter([tag for nltk.word, tag in x])) # counts number of given pos for given row

29pos_df = pd.DataFrame(df['pos_counts'].tolist()).fillna(0).astype(int) # fills number of counted pos

30pos_df.index = df.index # sets index of pos_df same as df

31

32# print(pos_df)

33

34time = df['Time'] # pulls from 'time' column of df

35date = df['Date'] # pulls from 'date' column of df

36

37pos_df.insert(0, 'Time', time) # adds time to leftmost column of pos_df

38pos_df.insert(1, 'Date', date) # adds date to second leftmost column of pos_df

39

40# print(pos_df)

41

42pos_df.to_csv('POS_Team6.csv', index=True) # exports result as a csv file -- make sure to change name!

43Based on my findings, it appears that humans may modify their communication syntax as interaction with text-to-image models continues. This conclusion is drawn from the provided Tableau charts, where the line graphs demonstrate "spikes" and "drops" in the amount of POS tags of prompts over time.

A closer look at the data reveals that these changes correlate with the images generated by the model. Team 1 underwent a stylistic change between Prompt #223 to Prompt #478, where they began with a semi-realistic style before ending in a more typical "comic" style. This correlates with the drastic changes in parts-of-speech as visualized in Team 1's graph.

While this project has revealed a potential insight into whether humans adapt their communication after interacting with generative AI models, it also poses several questions on the exact mechanisms that trigger these adaptations.

I presented this project at the 2024 National Student Data Corps Data Science Symposium as part of the Undergraduate Student Cohort. I won 3rd place overall for my efforts and I aspire to continue my work in the field of human-computer interactions research.

Future possibilities with this project include interviewing participants on the rationale behind their prompting formulas and perhaps conducting an "in-person" hackathon where I could observe the participants prompt MidJourney in real time.

Overall, this project has inspired me to continue my research work, leading me to register for an undergraduate honors thesis research course at UNC Chapel Hill for the 2025 fall semester. I hope to continue contributing to the human-computer interactions field overall to better our current understanding of human relationships with AI.